Research

Research Overview

CiPH3R-Lab focuses on secure and trustworthy AI/ML and cybersecurity applications, with an emphasis on privacy-preserving learning, adversarial machine learning, and critical infrastructure security. Our work spans federated learning, reinforcement learning, blockchain, and cyber-physical systems (CPS), with the goal of building systems that remain reliable under realistic attacks and operational constraints.

Core Research Thrusts

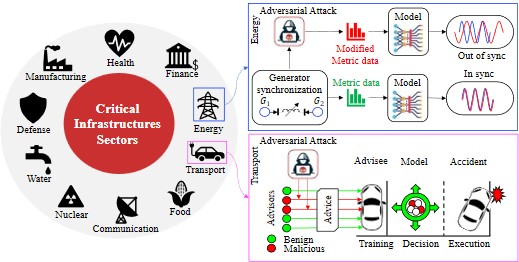

1) Trustworthy AI/ML for Critical Infrastructure Security

- Secure and trustworthy AI/ML applications for real-world cyber-physical critical infrastructure systems

- Resilience, adaptation, and secure decision-making in distributed agents

- Risk assessment and standards-aligned security analysis (e.g., NIST/ISO practices)

2) Adversarial Machine Learning

- Model poisoning attacks and defenses

- Evasion-resilient adversarial strategies in learning systems

- Adversarial dynamics in multi-agent Reinforcement Learning (RL) and experience sharing

- Adaptive and stealthy model poisoning in Federated Learning (FL)

- Defensive strategies against Byzantine/adversarial clients

3) Privacy-Preserving Learning

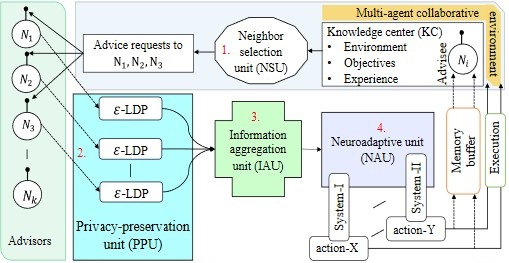

- Differential Privacy (DP) and Local Differential Privacy (LDP) for multi-agent and distributed settings

- Privacy–utility–security tradeoff analysis for operational deployments

- Lightweight, scalable blockchain consensus mechanisms for constrained operational environments

- Integrity and auditability for infrastructure monitoring and control

Ongoing Projects

Project-1: Zen-AI: Zero-day-aware Neurocognitive Decision Making with Agentic AI

A trustworthy neurocognitive decision-support framework for multi-agent critical infrastructures:

- Dual-process architecture (fast heuristic System-I + slow analytic System-II)

- Goal: authentic, accurate, secure, reliable, timely (AASRT) information exchange and adaptive resilience

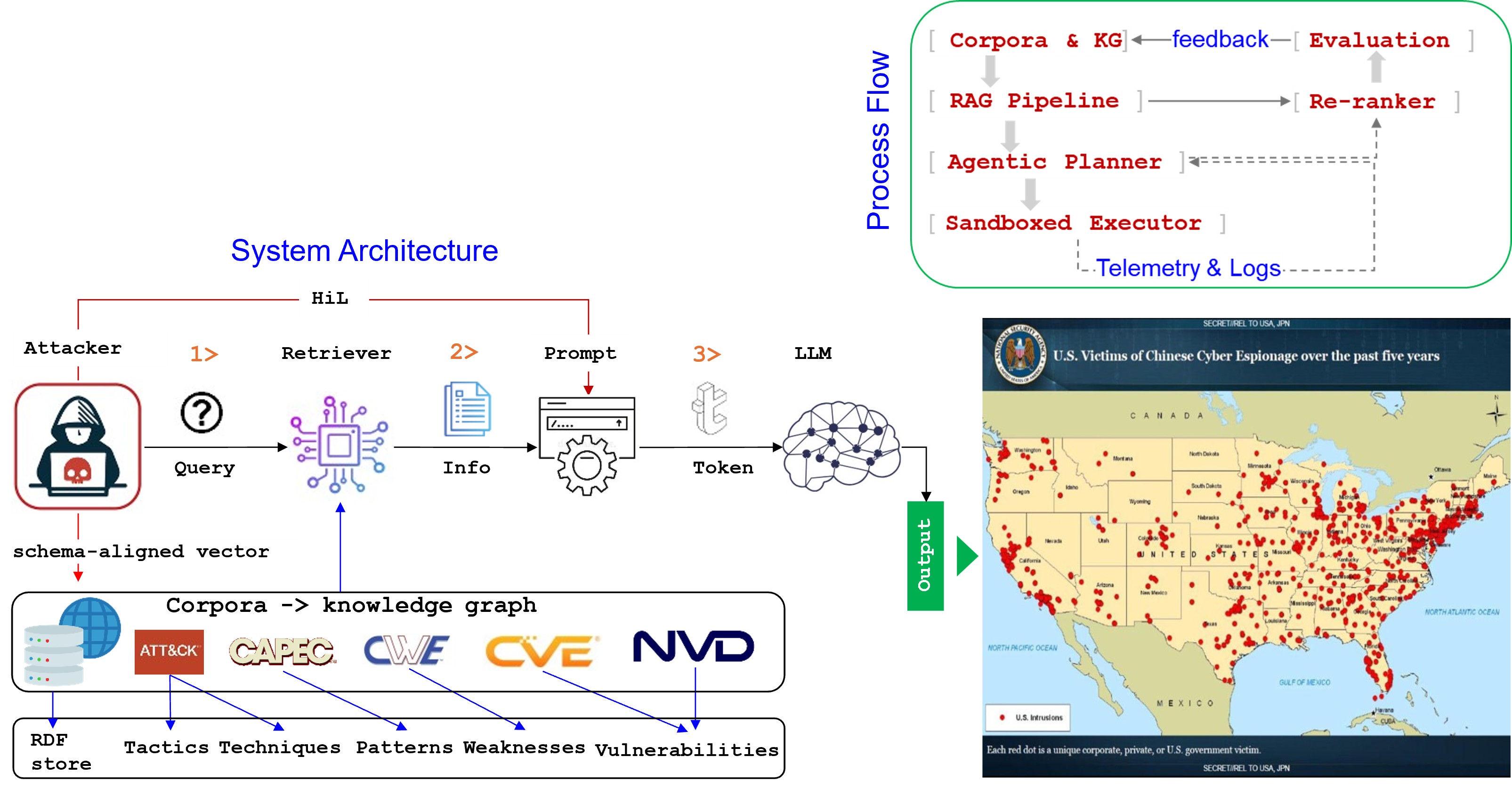

Project-2: RAG-powered Cyber Red Teaming with Human-in-the-Loop (HiL)

A retrieval-augmented generation (RAG)-based agentic LLM approach for safely emulating attacker workflows:

- Leverages MITRE ATT&CK/CAPEC concepts and structured knowledge from CVE/CWE/NVD-style corpora

- Operates in sandboxed environments with HiL oversight

- Goal: quantify how agentic AI accelerates adversary workflows and derive defense-in-depth mitigations

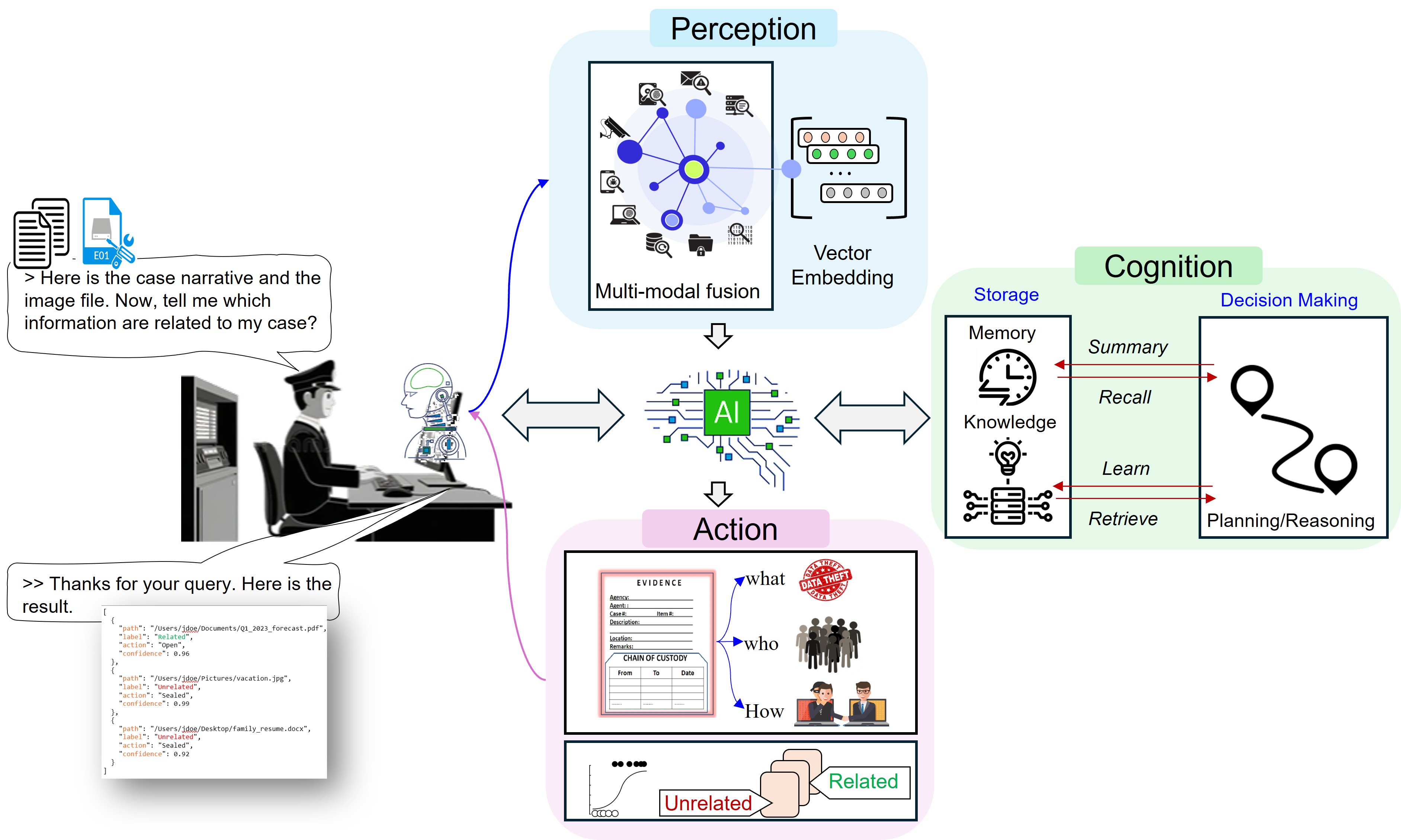

Project-3: PRISM: Policy-Regulated Investigative Segmentation for Ethical Digital Forensics

An ethics-first agentic AI approach for triaging large evidence sets (disks, memory dumps, logs, PCAPs, mobile images):

- Contextualizes case narratives and groups/tags artifacts as case-related vs. unrelated

- Goal: reduce unintentional/unethical data exposure while preserving investigator speed

Funding & Proposal Activity

- PRISM: Policy-Regulated Investigative Segmentation for Ethical Cybercrime Forensics (Submitted to the Amazon Research Award 2026)

- Jag-AI: AI for All JAGUARS (Submitted to the U.S. Department of Education FIPSE-SP program; multi-PI team)

- Zen-AI (In preparation for the Texas A&M System Research Excellence Fund (REF))

- Framework-Aware API Misuse Detection in Cross-Platform Mobile Apps (In preparation for the Texas A&M System Research Excellence Fund (REF))

- RAG-powered Cyber Red Teaming with Human-in-the-Loop (HiL) (In preparation for the Secure AI Grant, Foresight Institute)

Collaborations

We collaborate with researchers and faculty at institutions including:

- Purdue University Northwest (PNW)

- University of Nevada, Reno (UNR)

- Texas A&M University–Kingsville (TAMUK)

Interested in Joining?

We welcome motivated students interested in:

- Adversarial ML, privacy-preserving learning (DP/LDP), federated learning security

- Cyber-physical and critical infrastructure security

- Agentic AI for cybersecurity and digital forensics

Please reach out via the Contact page with your interests and background.